Section 9: Decision Trees

Sections 9 and 10 cover tree-based methods. The three main methods are:

- Decision Trees (Section 9)

- Random Forests (Section 10)

- Boosted Trees (Section 10)

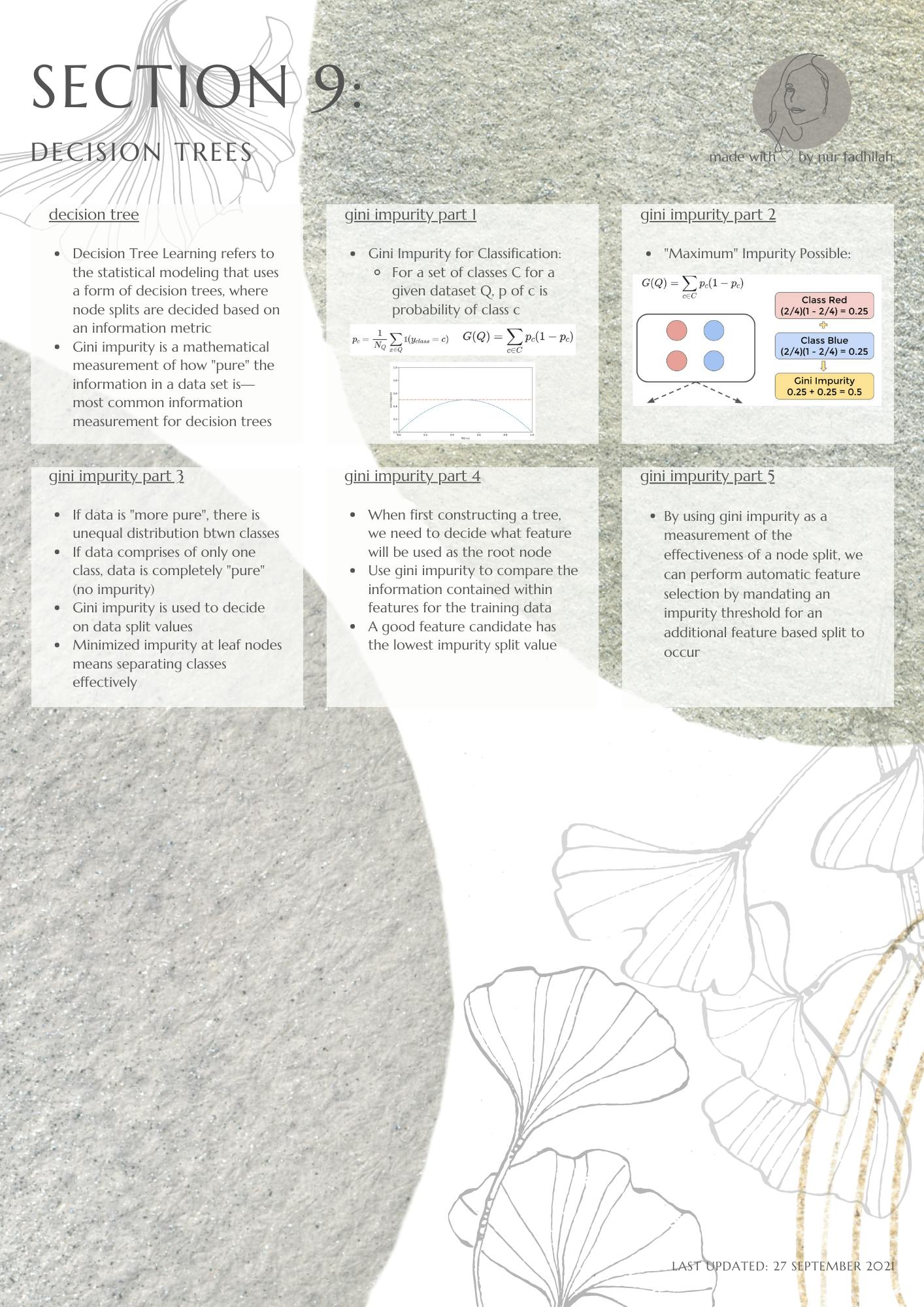

Each of these methods stems from the basic decision tree algorithm. Fundamentally, tree-based methods rely on the ability to split data based on information from features. This requires a mathematical definition of information and a way to measure it.
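As a rough illustration of what "measuring information" can mean, the sketch below computes two common impurity measures, Shannon entropy and Gini impurity, along with the information gain of a split. The function names and the toy labels are hypothetical and chosen only for this example. The notes above do not say which measure is used, so treat the choice here as an assumption.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def gini(labels):
    """Gini impurity of a list of class labels."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def information_gain(parent, left, right, impurity=entropy):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    weighted = (len(left) / n) * impurity(left) + (len(right) / n) * impurity(right)
    return impurity(parent) - weighted


# Hypothetical toy labels: an evenly mixed parent split into two pure children.
parent = ["yes", "yes", "no", "no"]
left, right = ["yes", "yes"], ["no", "no"]

print(entropy(parent))                        # impurity of the mixed parent
print(gini(parent))                           # same node, measured with Gini
print(information_gain(parent, left, right))  # gain from the perfect split
```

A split that separates the classes more cleanly leaves purer children and therefore a larger gain, which is the quantity a tree tries to maximize when choosing where to split.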

The Classification and Regression Trees (CART) framework introduces many concepts:

- Cross validation of Trees

- Pruning Trees

- Surrogate Splits

- Variable Importance Scores

- Search for Linear Splits

Limitations of a single decision tree:

- A single feature is chosen for the root node, so every prediction passes through that first split

- Splitting criteria can lead to some features not being used

- Potential for overfitting to the training data
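The first two limitations can be seen in a minimal sketch of a greedy root-split search. It assumes Gini impurity as the splitting criterion and uses a hypothetical helper, `best_root_split`, with made-up data. It is not the exact procedure of any particular library.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_root_split(rows, labels):
    """Return (feature_index, threshold, weighted_gini) for the greedy best split."""
    best = None
    for feature in range(len(rows[0])):
        # Try each observed value of this feature as a candidate threshold.
        for threshold in sorted({row[feature] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # skip splits that leave one side empty
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[2]:
                best = (feature, threshold, score)
    return best


# Hypothetical data: feature 0 separates the classes, feature 1 is noise.
rows = [(1.0, 7.0), (2.0, 3.0), (3.0, 8.0), (4.0, 2.0)]
labels = ["a", "a", "b", "b"]

print(best_root_split(rows, labels))
```

In this toy data, feature 0 alone separates the two classes, so the search picks it for the root and feature 1 is never used. A tree that keeps splitting until every leaf is pure will also fit noise in the training data, which is the overfitting risk listed above.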

References:

- An Introduction to Statistical Learning (free PDF available)

- Jose Portilla's 2021 Python for Machine Learning & Data Science Masterclass